In a perfect world, GIS data would be meticulously maintained and seamlessly migrate from system to system without a hitch. However, it is often the case that the data has been through a lot over the years… multiple systems, users, and interfaces. This can easily lead to data that is forgotten and no longer used, data that is not always maintained or consistent, and data that has not been controlled by a domain or by other validation rules. When trying to build a new target data model, this presents a large problem:

How do we accurately analyze the data in all its forms?

More importantly, how do we make this analysis human-readable and presentable to the client in a straightforward way? On a recent GIS Replacement project, UDC’s data modeling team found an innovative way to overcome these challenges.

Defining the Data Model

There were several core tasks needed to form the base data model for the client, but few were easy to tackle. We first needed to identify the data elements that were required for the Utility and Pipeline Data Model (UPDM), the standard target data model, and ensure that they were captured in the correct way. We also needed to identify the data elements that were required for future integrations. This company utilizes Maximo, Synergi, transmission integrity management program (TIMP)/distribution integrity management program (DIMP), advanced distribution management systems (ADMS) and various other interfaces that are vitally important to the utility’s operations. Another important task involved identifying those data elements not required to be migrated; any opportunity to downsize the data model and to discard data that is no longer used is significant. Finally, we needed to understand the transformations that the data might take from the source to the target. Key questions here included:

Would it migrate directly as is?

Would it need to be mapped from one domain to another, from one value to another?

Would it require spatial rules, subtype rules, etc.?

Using Metadata Analysis to Address Data Model Challenges

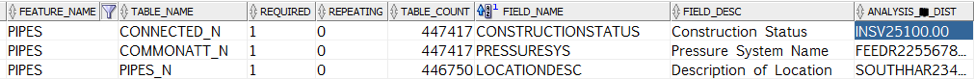

These challenges were all dealt with using a metadata analysis table that allowed for an efficient way to analyze substantial amounts of data in a short amount of time, present that data to the customer, and streamline the data model build effort. This was accomplished using custom SQL procedures to first gather information about the source data model, the metadata, and then analyze the attribute values. Not all attributes were analyzed. For example, internal attributes required by the source GIS, those known to contain a unique values or attributes containing an XY coordinate were not analyzed. The SQL procedure then wrote all the information to a single column for each feature and attribute and displayed by value and percentage of the total.

The analysis data is formatted to list source metadata, e.g. which table and field a value is coming from, as well as other information such as if the data component is required in the source or not, if it is repeatable in the source, and if there is a domain existing in the source. This approach allowed for each value in the source data field to be broken up by geographic area (this utility encompasses more than one state) and facility class (i.e. Distribution or Transmission).

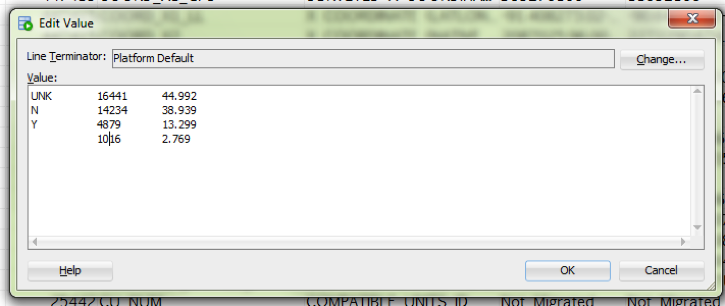

For example, the Construction Status column has the following four values listed by the frequency of occurrence. The last value is null and occurs 1016 time in the data which is 2.769% of the time.

Accurately Assessing Data and Business Needs

The analysis table allowed us to easily identify patterns across features and to make broad assumptions about the data. For example, looking at the analysis results for a field indicating a feature’s Status (i.e. “In Service”, “Retired”, etc.), it was easy to see that almost 100% of the data was populated with valid values for that field, and therefore, the general assumption was made that this field would be migrated for every feature and used in the target model. Alternatively, there were other fields that showed minimal usage and were clear candidates for exclusion. For example, a field describing location was often not populated, and where it was populated the analysis showed small percentages of inconsistent and unreliable data. The analysis table made it apparent that this data was not well-maintained and could be excluded for all features.

The analysis table also enabled us to identify cases where a field was needed moving forward, but the existing data was not; in the analysis, these fields were shown to be sparsely populated or incorrect at the time of migration but were deemed necessary for future use.

The analysis was also an effective way to recognize need for customization. The summary provided allowed the client to identify where Autoupdaters or other customization between interfaces was required based on how the values were being set, and a note was created in the table to record that. When analysis was complete, the table became a repository of migration information that was simple to filter on. This approach is now being used across multiple projects, gas distribution, gas transmission, landbase and electric distribution.

Metadata Analysis – Add it to Your Toolbox

The metadata analysis table is an upfront way to present a detailed breakdown of source data to the client. It shows clear evidence of the current business practices within a GIS system. It is easily communicated across all teams involved in a migration; it can be viewed by anyone with Oracle access, exported as an Excel spreadsheet, or categorized into a high-level mapping instead of a detailed analysis. Plus, it is easily repeatable across different disciplines.

We had a very tight schedule for the migration and without this detailed analysis tool we would not have been able to meet our deadline or to synthesize so much information within such a brief period. There is rarely going to be a completely clean migration project; however, using an analysis tool like this one can certainly make the process more efficient and agreeable.

Learn more about UDC’s Business Process Support services or contact us to discuss your utility data migration project, our metadata analysis tools, and how we can help throughout your migration journey.