When the data in your company’s geodatabase is edited, the accuracy of those edits has a direct impact on data-driven business decisions and on downstream systems. That is why it is important to have a complete data quality management process in place, one that includes both editing restrictions and built-in validation checks. Setting up the initial checks and balances is usually straightforward, and out-of-the-box data review tools can help. For many geodatabases, however, data quality problems begin to appear when edits become large-scale and validations can no longer be easily completed or managed with the standard tools.

In this blog post, we discuss how your company can reduce GIS data integrity risk, and we show how we answered the following questions for our client:

- What can you do when the amount of data that needs to be validated is overwhelming?

- How can you ensure that the integrity of the data is reliable and trustworthy?


Ensuring Data Quality

Step One – Restrict Data Input

To preserve GIS data integrity and minimize risk, the geodatabase provides features such as Allow Nulls, Domains, Subtypes, and Default Values, which enforce data accuracy by restricting the values editors can enter. These features guide editors through the editing process, but the edits still need to be certified against the rules that are important to your organization.
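To make the idea of input restrictions concrete, here is a minimal Python sketch of how Allow Nulls, a coded-value domain, and a default value interact when a record is entered. It is an illustration under stated assumptions only: in a real geodatabase these properties are configured on the fields themselves and enforced by ArcGIS, the `FieldSpec` class and `apply_input_rules` function are hypothetical, and subtypes are left out for brevity.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Set, Tuple

@dataclass
class FieldSpec:
    """Hypothetical stand-in for a geodatabase field's input properties."""
    name: str
    allow_nulls: bool = True
    domain: Optional[Set[Any]] = None  # coded-value domain; None = unrestricted
    default: Any = None                # applied when the editor leaves the field empty

def apply_input_rules(specs: List[FieldSpec],
                      record: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Fill in default values, then report values that break null or domain rules."""
    cleaned = dict(record)
    problems = []
    for spec in specs:
        value = cleaned.get(spec.name)
        if value is None and spec.default is not None:
            value = spec.default
            cleaned[spec.name] = value
        if value is None:
            if not spec.allow_nulls:
                problems.append(f"{spec.name}: nulls not allowed")
        elif spec.domain is not None and value not in spec.domain:
            problems.append(f"{spec.name}: {value!r} not in domain")
    return cleaned, problems

specs = [
    FieldSpec("MATERIAL", allow_nulls=False, domain={"STEEL", "PE", "CAST IRON"}),
    FieldSpec("STATUS", allow_nulls=False, domain={"ACTIVE", "ABANDONED"}, default="ACTIVE"),
]
record, problems = apply_input_rules(specs, {"MATERIAL": "COPPER"})
print(record)    # {'MATERIAL': 'COPPER', 'STATUS': 'ACTIVE'}
print(problems)  # ["MATERIAL: 'COPPER' not in domain"]
```

Restrictions like these narrow what editors can enter, but as the text notes, they do not replace certifying the finished edits against organizational rules.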

Step Two – Certify Edits

Once edits are in place, applying validation rules lets the user check that the data meets the standards specified by the organization. Validation checks help the user verify accuracy before changes are accepted into the GIS.

Experiencing Paralyzing Quality Control?

In our client’s Linear Referencing model, a simple feature study can result in hundreds to thousands of features being altered. Validating those edits is critical to data integrity, but at that scale it can be a daunting, next-to-impossible task. In many cases, a ready-made data review tool can be used to validate the data; however, significant performance concerns arise when large amounts of data must be validated. The major concern for our client was that running all of the required validation jobs for a single P_Pipe from a feature study would take 40 hours to complete.

Create Custom Error Checking and Validation

So, what can you do when the amount of data that needs to be validated is overwhelming?

Our answer to this question… create a custom suite of validation tools that is easy to use and fast. Did I say fast? I meant extremely fast.

To help our client meet their validation challenges, we designed a suite of tools, built on the ArcGIS Pro 2.1 platform with an Oracle 12g database, as the primary means of validating data within a version.

The validation tools were divided into two tiers:

- Database – The database is used to hold configurations for the validation rules, and SQL is used to run the rules.

- Application – The application provides the user with a user interface for viewing the changes within a version and validating those changes.

The back end (the database) is the brains of the suite: it reads the validation rules from a configuration table and executes them against a set of records. When the checks are complete, the results are written to an output table, which the application then reads and displays to the user.
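A configuration-driven back end of this kind can be sketched as follows. This is a hypothetical illustration, not the client’s implementation: it uses Python’s built-in `sqlite3` module as a stand-in for Oracle 12g, and the table names (`pipe`, `validation_rule`, `validation_result`), their columns, and the `run_rules` function are assumptions. Each rule row stores a `SELECT` that returns the `objectid` values failing the rule; the runner restricts that query to the edited records and writes each failure to the output table.

```python
import sqlite3

# SQLite stand-in for the Oracle back end; all table/column names are hypothetical.
SCHEMA = """
CREATE TABLE pipe (objectid INTEGER PRIMARY KEY, material TEXT, diameter REAL);
CREATE TABLE validation_rule (
    rule_id   INTEGER PRIMARY KEY,
    feature   TEXT,  -- table the rule applies to
    column_nm TEXT,  -- column the rule checks
    severity  TEXT,  -- 'Error' or 'Warning'
    reason    TEXT,  -- message shown to the editor
    rule_sql  TEXT   -- SELECT returning the objectids that FAIL the rule
);
CREATE TABLE validation_result (
    rule_id INTEGER, feature TEXT, objectid INTEGER,
    column_nm TEXT, severity TEXT, reason TEXT);
"""

def run_rules(conn, feature, edited_ids):
    """Run every configured rule for one table against the edited records
    and write each failure to the output table read by the application."""
    conn.execute("DELETE FROM validation_result WHERE feature = ?", (feature,))
    ids = list(edited_ids)
    if ids:
        rules = conn.execute(
            "SELECT rule_id, column_nm, severity, reason, rule_sql "
            "FROM validation_rule WHERE feature = ?", (feature,)).fetchall()
        marks = ",".join("?" * len(ids))
        for rule_id, column_nm, severity, reason, rule_sql in rules:
            # Wrap the configured SQL so it only sees the edited records.
            failed = conn.execute(
                f"SELECT objectid FROM ({rule_sql}) WHERE objectid IN ({marks})", ids
            ).fetchall()
            conn.executemany(
                "INSERT INTO validation_result VALUES (?, ?, ?, ?, ?, ?)",
                [(rule_id, feature, oid, column_nm, severity, reason) for (oid,) in failed])
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO pipe VALUES (?, ?, ?)",
                 [(1, "STEEL", 6.0), (2, None, 4.0), (3, "PE", -2.0)])
conn.executemany("INSERT INTO validation_rule VALUES (?, ?, ?, ?, ?, ?)", [
    (1, "pipe", "material", "Error", "Material is required",
     "SELECT objectid FROM pipe WHERE material IS NULL"),
    (2, "pipe", "diameter", "Warning", "Diameter must be positive",
     "SELECT objectid FROM pipe WHERE diameter <= 0"),
])
run_rules(conn, "pipe", edited_ids=[2, 3])
print(conn.execute("SELECT objectid, column_nm, severity FROM validation_result "
                   "ORDER BY objectid").fetchall())
# [(2, 'material', 'Error'), (3, 'diameter', 'Warning')]
```

Each rule runs as one set-based query over all of the edited records rather than once per feature. Because the rule SQL is spliced into the query text, the configuration table must be treated as trusted, administrator-maintained content; the edited IDs are passed as bound parameters.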

The supported validation rules fall into two categories:

- Field Validations – Such as Allow Nulls, Domains, Subtypes and Default Values

- Shape Validations – Such as Nulls, Overlapping, Multipart, and Intersecting
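The shape checks can be illustrated with plain geometry logic. The sketch below assumes polylines are represented as lists of parts, each a list of (x, y) vertices, and uses the standard orientation-based segment intersection test. The rule names and functions are hypothetical, and in the suite described here such checks run as SQL in the database rather than in Python. The collinear branch of the test also catches overlapping segments.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Polyline = List[List[Point]]  # list of parts, each a list of vertices

def orient(a: Point, b: Point, c: Point) -> int:
    """Sign of the cross product (b - a) x (c - a): 1 left turn, -1 right turn, 0 collinear."""
    v = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (v > 0) - (v < 0)

def on_segment(a: Point, b: Point, p: Point) -> bool:
    """Assuming p is collinear with a-b, is p within the segment's bounding box?"""
    return (min(a[0], b[0]) <= p[0] <= max(a[0], b[0])
            and min(a[1], b[1]) <= p[1] <= max(a[1], b[1]))

def segments_intersect(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    d1, d2 = orient(q1, q2, p1), orient(q1, q2, p2)
    d3, d4 = orient(p1, p2, q1), orient(p1, p2, q2)
    if d1 * d2 < 0 and d3 * d4 < 0:
        return True  # proper crossing
    # Touching or collinear overlap: an endpoint lies on the other segment.
    return ((d1 == 0 and on_segment(q1, q2, p1)) or (d2 == 0 and on_segment(q1, q2, p2))
            or (d3 == 0 and on_segment(p1, p2, q1)) or (d4 == 0 and on_segment(p1, p2, q2)))

def shape_problems(shape: Optional[Polyline]) -> List[str]:
    """Single-feature shape rules (hypothetical names): null and multipart geometry."""
    if shape is None or not any(shape):
        return ["NULL_SHAPE"]
    return ["MULTIPART"] if len(shape) > 1 else []

def lines_intersect(a: Polyline, b: Polyline) -> bool:
    """Feature-to-feature rule: does any segment of a touch or cross any segment of b?"""
    return any(segments_intersect(p1, p2, q1, q2)
               for part_a in a for p1, p2 in zip(part_a, part_a[1:])
               for part_b in b for q1, q2 in zip(part_b, part_b[1:]))

main = [[(0, 0), (10, 0)]]
service = [[(5, -1), (5, 1)]]
split = [[(0, 0), (1, 0)], [(2, 0), (3, 0)]]
print(shape_problems(None))             # ['NULL_SHAPE']
print(shape_problems(split))            # ['MULTIPART']
print(lines_intersect(main, service))   # True
print(lines_intersect(service, split))  # False
```

In a pipe network, features that legitimately connect at their endpoints would also be reported by `lines_intersect`, so a production rule would need to exempt shared endpoints.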

The front end (the ArcGIS Pro Add-In) provides editors with a user interface for viewing and validating all of the changes within the version. It does this by listing every feature class and table in the version that has been altered (i.e., through inserts, updates, or deletes), along with the column-by-column differences for the selected record.
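One simplified way to picture how altered records are found is to compare a snapshot of each table in the parent version with the same table in the edit version, keyed by ObjectID. The sketch below assumes such in-memory snapshots (a real versioned geodatabase tracks edits differently) and uses hypothetical function names. It classifies each record as an insert, update, or delete and totals the edited records per table, much like the counts shown in the Differences tab.

```python
from typing import Any, Dict, List, Tuple

Rows = Dict[int, Dict[str, Any]]  # ObjectID -> {column: value}

def diff_versions(parent: Rows, edit: Rows) -> Tuple[List[int], List[int], List[int]]:
    """Classify ObjectIDs as inserts, updates, or deletes between two snapshots."""
    parent_ids, edit_ids = set(parent), set(edit)
    inserts = sorted(edit_ids - parent_ids)
    deletes = sorted(parent_ids - edit_ids)
    updates = sorted(oid for oid in parent_ids & edit_ids if parent[oid] != edit[oid])
    return inserts, updates, deletes

def difference_counts(tables: Dict[str, Tuple[Rows, Rows]]) -> Dict[str, int]:
    """Edited-record count per table, listing only tables that actually changed."""
    counts = {}
    for name, (parent, edit) in tables.items():
        total = sum(len(ids) for ids in diff_versions(parent, edit))
        if total:
            counts[name] = total
    return counts

parent = {1: {"MATERIAL": "STEEL"}, 2: {"MATERIAL": "PE"}, 3: {"MATERIAL": "PE"}}
edit = {1: {"MATERIAL": "STEEL"}, 2: {"MATERIAL": "STEEL"}, 4: {"MATERIAL": "PE"}}
print(diff_versions(parent, edit))  # ([4], [2], [3])
print(difference_counts({
    "P_Pipe": (parent, edit),
    "P_NonControllableFitting": ({1: {}}, {1: {}}),  # unchanged, so omitted
}))  # {'P_Pipe': 3}
```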

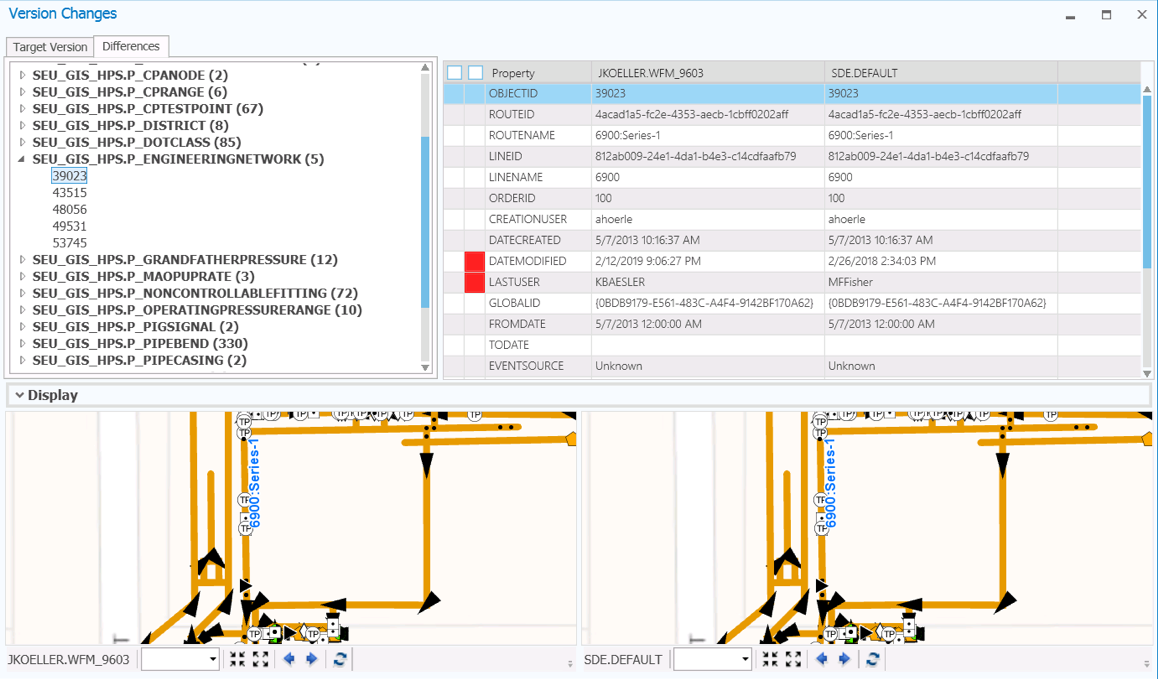

SAMPLE: VALIDATION TOOL RESULTS

There’s a lot to unpack in the screenshot, but it’s much simpler than it appears.

TOP LEFT: The left-panel Differences tab shows the features/tables that have been edited within a version, and the corresponding record counts.

TOP RIGHT: The right-panel table compares the edit version and the parent version column by column for the selected record. Red cells indicate columns that contain differences; to see only those columns, tick the checkbox in the header.

BOTTOM: The bottom-panel Display shows the differences between the edit and parent versions geographically.
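The column-by-column comparison in the right panel, including the filter that hides unchanged columns, can be sketched like this. The row dictionaries and the `column_differences` function are hypothetical, and a missing row (for an insert or a delete) is treated as all-null.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]

def column_differences(parent_row: Optional[Row], edit_row: Optional[Row],
                       only_changed: bool = False) -> List[Tuple[str, Any, Any, bool]]:
    """Return (column, parent value, edit value, differs) for each column.
    A missing row (insert or delete) is treated as all-null."""
    parent_row, edit_row = parent_row or {}, edit_row or {}
    columns = list(dict.fromkeys([*parent_row, *edit_row]))  # keep order, drop duplicates
    rows = [(col, parent_row.get(col), edit_row.get(col),
             parent_row.get(col) != edit_row.get(col)) for col in columns]
    # only_changed=True plays the role of the header checkbox in the UI.
    return [r for r in rows if r[3]] if only_changed else rows

parent = {"OBJECTID": 2, "MATERIAL": "PE", "DIAMETER": 4.0}
edit = {"OBJECTID": 2, "MATERIAL": "STEEL", "DIAMETER": 4.0}
print(column_differences(parent, edit, only_changed=True))
# [('MATERIAL', 'PE', 'STEEL', True)]
```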

Ensuring Reliability

How can you ensure that the integrity of the data is reliable and trustworthy?

Our answer to that question… the suite of custom validation tools allows the editor to run all of the required validations for the features altered within a version, within an acceptable amount of time. To illustrate, the following example shows a simple feature study whose load altered over 1,100 features in some manner (attribute or geometry).

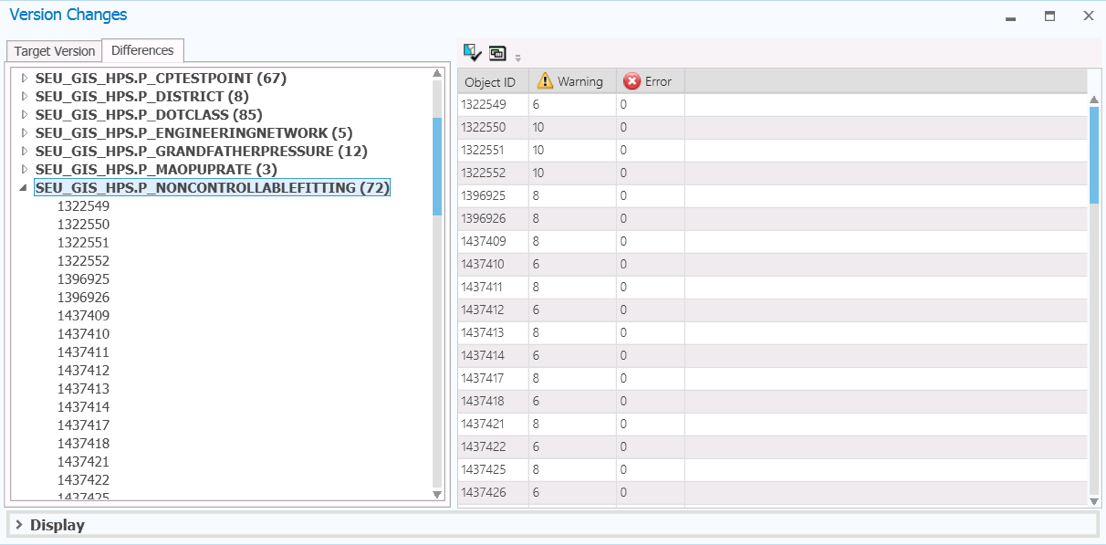

When the editor needs to validate the integrity of the data for the P_NonControllableFitting features, they select the corresponding entry in the left panel of the application and click the Validate button. This executes all of the validation rules for P_NonControllableFitting (via SQL) against the 72 features.

SAMPLE: FEATURE VALIDATION RESULTS – OVERVIEW

The check results are gathered, correlated by column, and summarized. The overview panel gives a quick visual breakdown of the features by severity (i.e., Error or Warning). Severity is set per validation rule and can easily be changed by updating the configuration table.
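The summary step can be expressed as two SQL aggregations. In this hypothetical sketch (SQLite standing in for Oracle, with assumed table and column names), severity is stored only in the rule configuration and joined in when results are summarized, so editing a rule’s severity changes the summary without touching the stored results. A feature with both an Error and a Warning is counted under its worst severity.

```python
import sqlite3

# Hypothetical tables; severity lives only in the rule configuration.
SCHEMA = """
CREATE TABLE validation_rule (
    rule_id INTEGER PRIMARY KEY, column_nm TEXT, severity TEXT, reason TEXT);
CREATE TABLE validation_result (rule_id INTEGER, objectid INTEGER);
"""

def features_by_severity(conn):
    """Count features by their worst severity (an Error outranks a Warning)."""
    return conn.execute("""
        SELECT worst, COUNT(*) FROM (
            SELECT CASE MIN(CASE v.severity WHEN 'Error' THEN 0 ELSE 1 END)
                        WHEN 0 THEN 'Error' ELSE 'Warning' END AS worst
            FROM validation_result r
            JOIN validation_rule v ON v.rule_id = r.rule_id
            GROUP BY r.objectid)
        GROUP BY worst ORDER BY worst""").fetchall()

def problems_by_column(conn):
    """Correlate results by column: distinct failing features per column and severity."""
    return conn.execute("""
        SELECT v.column_nm, v.severity, COUNT(DISTINCT r.objectid)
        FROM validation_result r
        JOIN validation_rule v ON v.rule_id = r.rule_id
        GROUP BY v.column_nm, v.severity
        ORDER BY v.column_nm""").fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO validation_rule VALUES (?, ?, ?, ?)", [
    (1, "material", "Error", "Material is required"),
    (2, "diameter", "Warning", "Diameter must be positive")])
conn.executemany("INSERT INTO validation_result VALUES (?, ?)",
                 [(1, 10), (2, 10), (2, 11), (2, 12)])

print(features_by_severity(conn))  # [('Error', 1), ('Warning', 2)]
print(problems_by_column(conn))    # [('diameter', 'Warning', 3), ('material', 'Error', 1)]

# Promote rule 2 to an Error by editing the configuration table only.
conn.execute("UPDATE validation_rule SET severity = 'Error' WHERE rule_id = 2")
print(features_by_severity(conn))  # [('Error', 3)]
```

Copying severity into each result row at validation time would also work; the join shown here is just one design choice that makes a configuration change visible immediately.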

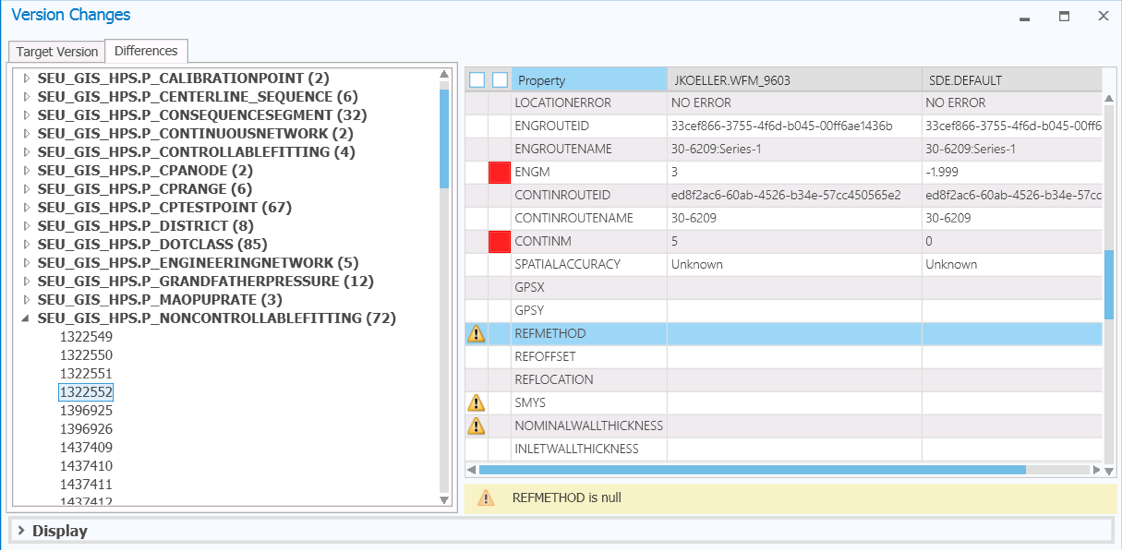

SAMPLE: FEATURE VALIDATION RESULTS – DETAIL

The right-panel is updated to include Error or Warning flags, and the reason that details the problem. You may filter to see only those columns that contain validation problems by ticking the checkbox in the header.

More About Custom Error Checking and Validation

The new suite of error checking and validation tools greatly enhances how our utility client manages large-scale edits within their linear referencing system. The tools are key to ensuring GIS data integrity and reducing risk for the company. Although designed for our client’s particular large-scale editing needs, the logic behind UDC’s validation suite can be applied to other gas and electric GIS-based systems where editing efficiency and accuracy have become a concern. We invite you to contact us to learn more about building custom error checking and validation for your utility.